A tech warning: AI is coming fast and it’s going to be a rough ride

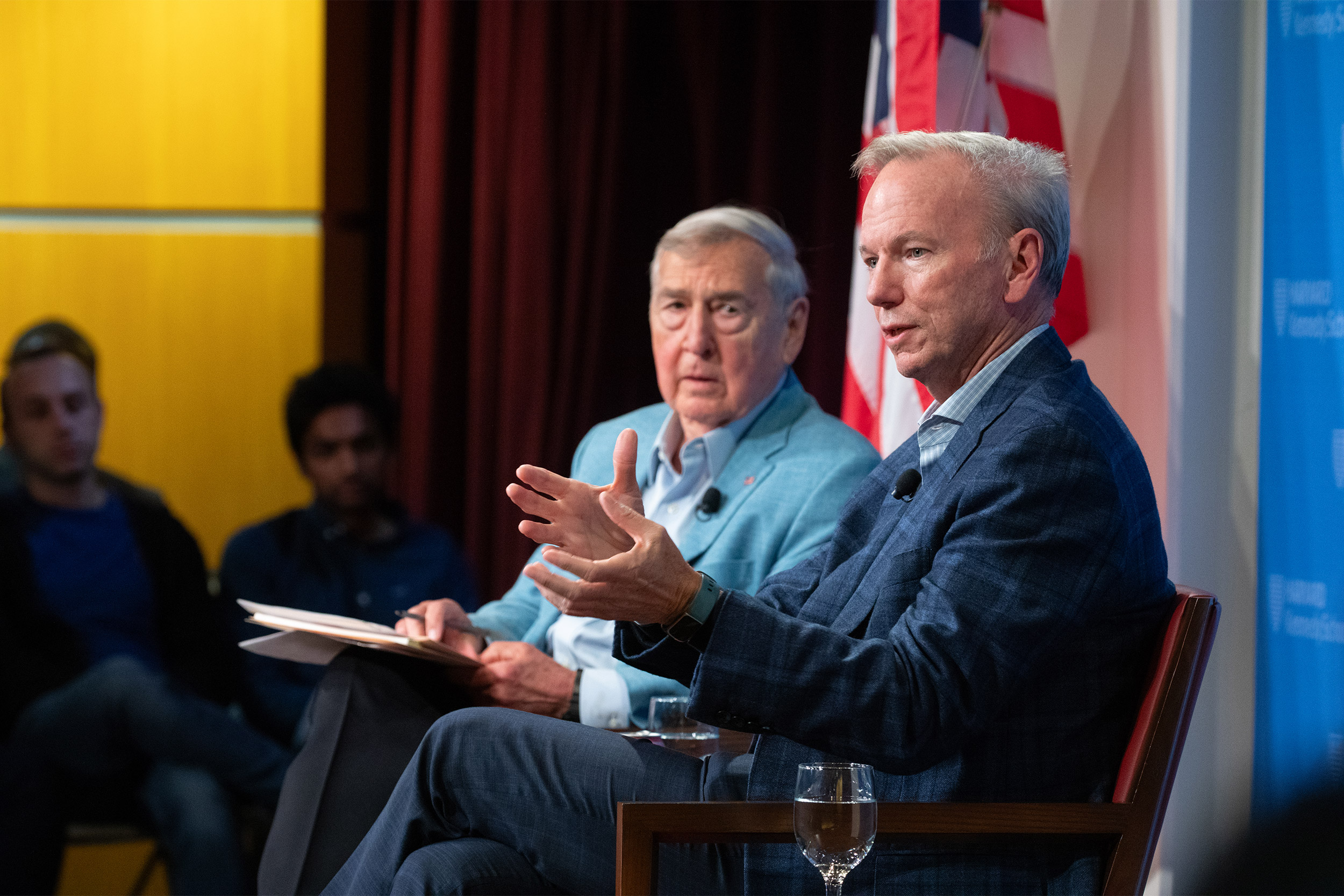

Eric Schmidt (right), the former CEO and chairman of Google, with Graham Allison at the JFK Forum on Wednesday.

Photo by Martha Stewart

Former Google chairman details disruptions, dangers technology will bring to economy, national security, other aspects of American life

ChatGPT’s writing and artistic capabilities offer the public a stunning preview of how far AI technology has already advanced and a hint of what’s to come. As AI evolves, the consequences for the U.S. economy, national security, and other vital parts of our lives will be enormous.

“It’s important for everybody to understand how fast this is going to change,” said Eric Schmidt, the former CEO and chairman of Google, during a conversation Wednesday evening with Graham Allison, Douglas Dillon Professor of Government at Harvard Kennedy School, about what’s just over the horizon in AI. “It’s going to happen so fast. People are not going to adapt.”

The pace of improvement is picking up. The high cost of training AI models, a time-consuming process, is coming down very quickly, and their output will be far more accurate and up to date than it is today, said Schmidt.

But “the negatives are quite profound,” he added. AI firms still have no solutions for issues around algorithmic bias or attribution, or for copyright disputes now in litigation over the use of writing, books, images, film, and artworks in AI model training. Many other as-yet-unforeseen legal, ethical, and cultural questions are expected to arise across all kinds of military, medical, educational, and manufacturing uses.

Most concerning are the “extreme risks” of AI being used to enable massive loss of life if the four firms at the forefront of this innovation (OpenAI, Google, Microsoft, and Anthropic) are not constrained by guardrails and their financial incentives are “not aligned with human values,” said Schmidt, who served as executive chairman of Alphabet, Google’s parent company, from 2015 to 2018, and as technical adviser from 2018 to 2020 before leaving altogether.

Governments around the world will need to lead the way with regulations, but it will be “tough” to anticipate and prevent every possible future misuse of AI, especially of open-source models available to all, or to expect that every nation will be as committed to regulation as the West.

Positive changes will take longer to be felt in U.S. national security because of the federal government’s slow, multi-year procurement process and a resistance to the type of open-ended, failure-driven approach to innovation common in the tech world.

“Soft power is going to be replaced by innovation power,” said Schmidt, referencing a concept about exerting non-military influence popularized by Joseph Nye, former Kennedy School dean and University Distinguished Service Professor Emeritus. “Future national security issues will be determined by how quickly you can innovate against the solution.”

The U.S. will soon need to grapple with moral and ethical questions about AI’s use during military campaigns, both in how to deploy it and defend against it. Current U.S. military law requires human control and oversight, but it’s not hard to envision a future in which an AI system decides which targets to hit and does so automatically.

“AI-enabled war is incredibly fast; you have to move very, very quickly. You don’t have time for a human in the loop,” said Schmidt, who served from 2016 to 2020 as chairman of the Defense Innovation Board, a federal advisory group that advises top Pentagon officials. The board was started by the late Ash Carter, a former U.S. Defense Secretary and HKS faculty member.

In the global AI technology arms race, China was “late to the party.” They have capable scientists and engineers, but they’re still struggling to find enough Chinese language training data to advance the open-source models they’re using, though “they’re working on that,” said the tech billionaire.

China also lost access to the world’s most sophisticated computer chips when the Biden administration expanded curbs on tech exports to China last October, “so they’re having trouble keeping up” with the rapid innovation at U.S. firms. Still, while China is “a couple years behind” now, “they’re going to win” the AI race, predicted Schmidt. “They’re capable of doing this, and they’re coming.”

As the cost to train AI models becomes more affordable, there will be a “proliferation problem,” where all sorts of nations and groups will be able to create AI systems, some with deadly or disruptive intent.

Asked what the U.S. can do about adversaries like China conducting psychological and information warfare that’s been supercharged by AI, Schmidt was not hopeful.

“With a single computer, you can build an entire ecosystem, an entire set of worlds, and everyone’s narrative can all be different, but they can have an underlying manipulation theme — this is all possible today. It’s already out there,” he said. That means the 2024 elections in India, the U.S. and Europe are going to be awash with fake, AI-generated material and potentially “an unmitigated disaster.”

So what are some potential solutions?

One option would be to try to control the use of open-source AI. In fact, Schmidt noted, some have mistakenly believed that a lack of access to vast datasets is what has kept AI out of the hands of all but a few companies.

“Data is not your problem. Your problem is talent and algorithms, which is a much easier game to win in open source,” said Schmidt, who encouraged Harvard students to figure out how to manage the proliferation of open source AI. “It’s an unsolved problem. I look forward to your results.”

Another measure would be to regulate social media companies, which are rewarded for driving up engagement and see upsetting users as a useful shortcut to that end, and to get them to come up with recommendations to address the problem. “And the way I would regulate it: Label the users, label the content, hold people responsible. We’re not doing that right now,” said Schmidt.

Eventually, the cyber marketplace will sort things out. In a world full of false material that’s promulgated by AI, there will be lots of AI that can detect the false stuff. “We will start to build economies around the whack-a-mole problem of the Good Guys AI staying slightly ahead of the Bad Guys most of the time — but not always. And some people will make some real money doing this.”