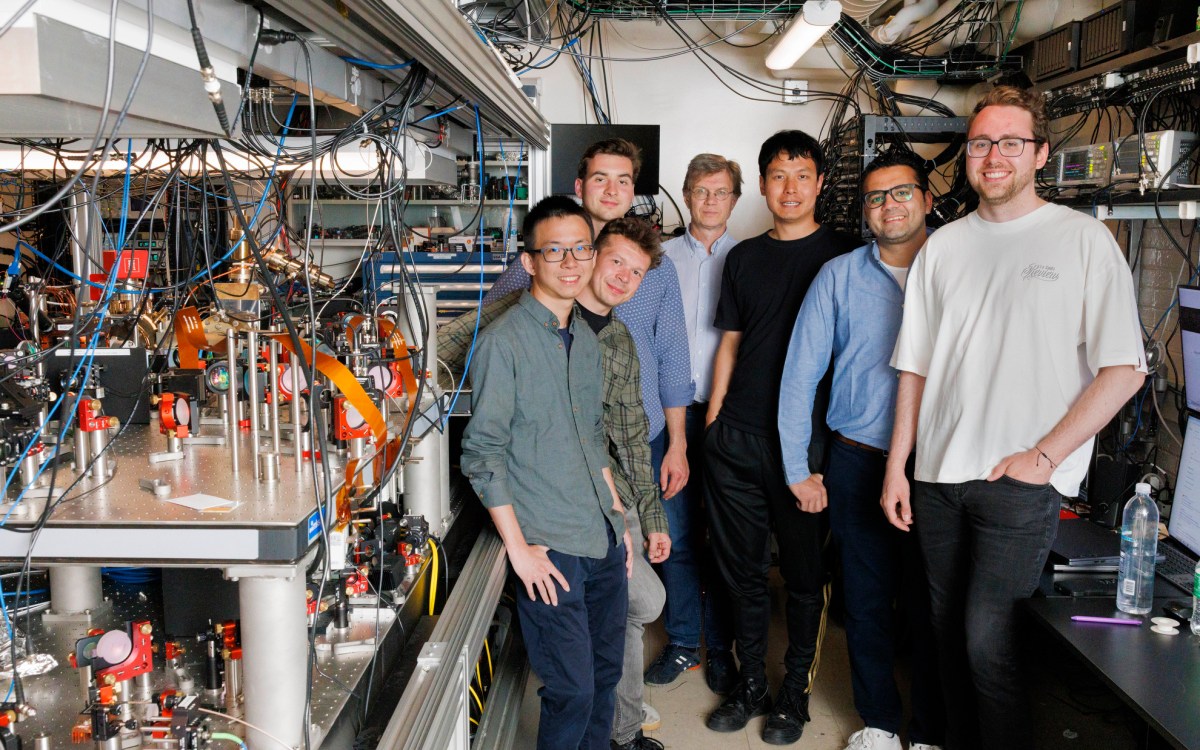

The Kempner Institute for the Study of Natural and Artificial Intelligence will be headed by Bernardo Sabatini (right) and Sham Kakade.

Courtesy of Sham Kakade; Kris Snibbe/Harvard Staff Photo

New University-wide institute to integrate natural, artificial intelligence

Initiative made possible by gift from Priscilla Chan and Mark Zuckerberg

Harvard University on Tuesday launched the Kempner Institute for the Study of Natural and Artificial Intelligence, a new University-wide initiative standing at the intersection of neuroscience and artificial intelligence, seeking fundamental principles that underlie both human and machine intelligence. The fruits of discoveries will flow in both directions, enhancing understanding of how humans think, perceive the world around them, make decisions, and learn, thereby advancing the rapidly evolving field of AI.

The institute will be funded by a $500 million gift from Priscilla Chan and Mark Zuckerberg, which was announced Tuesday by the Chan Zuckerberg Initiative. The gift will support 10 new faculty appointments, significant new computing infrastructure, and resources to allow students to flow between labs in pursuit of ideas and knowledge. The institute’s name honors Zuckerberg’s mother, Karen Kempner Zuckerberg, and her parents — Zuckerberg’s grandparents — Sidney and Gertrude Kempner. Chan and Zuckerberg have given generously to Harvard in the past, supporting students, faculty, and researchers in a range of areas, including public service, literacy, and cures.

“The Kempner Institute at Harvard represents a remarkable opportunity to bring together approaches and expertise in biological and cognitive science with machine learning, statistics, and computer science to make real progress in understanding how the human brain works to improve how we address disease, create new therapies, and advance our understanding of the human body and the world more broadly,” said President Larry Bacow.

“Priscilla Chan and Mark Zuckerberg have demonstrated a remarkable commitment to promoting discovery, innovation, and service at Harvard and other academic institutions around the United States,” said Bacow. “Their support for the creation of the Kempner Institute will advance Harvard’s education and research mission and is only the most recent way in which they have sought to do so. From supporting the study of COVID-19 treatments and advancing literacy research to boosting public service opportunities for undergraduates, they have shown a genuine commitment through their work at the Chan Zuckerberg Initiative and beyond to curing disease, improving lives, and encouraging others to serve.”

A focus of the institute will be training future generations of leaders and researchers in this area through funding dedicated to supporting undergraduate and graduate students as well as postdoctoral fellows. The institute, expected to open at the new Science and Engineering Complex in Allston by the end of 2022, is committed to the active recruitment of individuals from backgrounds traditionally underrepresented in STEM fields. It will be headed by Bernardo Sabatini, the Alice and Rodman W. Moorhead III Professor of Neurobiology at Harvard Medical School, and Sham Kakade, who in January will become a Gordon McKay Professor of Computer Science and Statistics at the Harvard John A. Paulson School of Engineering and Applied Sciences and a professor in the Faculty of Arts and Sciences. With a faculty steering committee made up of faculty across Schools and disciplines, the institute will convene experts in computer science, applied mathematics, neuroscience, cognitive science, statistics, and engineering.

Sabatini and Kakade, who is coming to Harvard from the University of Washington, spoke with the Gazette about the new effort and the promise and challenge presented by AI and the role it might play in all of our lives. Interview has been edited for length and clarity.

Q&A

Bernardo Sabatini and Sham Kakade

GAZETTE: Tell me about the new institute. What is its main reason for being?

SABATINI: The institute is designed to take from two fields and bring them together, hopefully to create something that’s essentially new, though it’s been tried in a couple of places. Imagine that you have over here cognitive scientists and neurobiologists who study the human brain, including the basic biological mechanisms of intelligence and decision-making. And then over there, you have people from computer science, from mathematics and statistics, who study artificial intelligence systems. Those groups don’t talk to each other very much.

We want to recruit from both populations to fill in the middle and to create a new population, through education, through graduate programs, through funding programs — to grow from academic infancy — those equally versed in neuroscience and in AI systems, who can be leaders for the next generation.

Over the millions of years that vertebrates have been evolving, the human brain has developed specializations that are fundamental for learning and intelligence. We need to know what those are to understand their benefits and to ask whether they can make AI systems better. At the same time, as people who study AI and machine learning (ML) develop mathematical theories as to how those systems work and can say that a network of the following structure with the following properties learns by calculating the following function, then we can take those theories and ask, “Is that actually how the human brain works?”

KAKADE: There’s a question of why now? In the technological space, the advancements are remarkable even to me, as a researcher who knows how these things are being made. I think there’s a long way to go, but many of us feel that this is the right time to study intelligence more broadly. You might also ask: Why is this mission unique and why is this institute different from what’s being done in academia and in industry? Academia is good at putting out ideas. Industry is good at turning ideas into reality. We’re in a bit of a sweet spot. We have the scale to study approaches at a very different level: It’s not going to be just individual labs pursuing their own ideas. We may not be as big as the biggest companies, but we can work on the types of problems that they work on, such as having the compute resources to work on large language models. Industry has exciting research, but the spectrum of ideas produced is very different, because they have different objectives.

GAZETTE: Is it by default going to be human intelligence that you’re studying, as opposed to some other animal intelligence?

SABATINI: Clearly one is interested in understanding human intelligence because it’s the most impressive among animals, but we will draw from the studies of any organism to gain insights into how intelligence works. The institute itself is going to be all dry lab, purely computational, but we will happily take data from animal studies. We will also have a collaborative grant program to seed directed studies and fund groups that we think will generate data crucial to understanding intelligence.

GAZETTE: You mention large-scale computing. Will that hardware require new systems being installed? Or are you going to use the existing infrastructure?

SABATINI: We’re going to buy a lot of GPUs [graphics processing units, which, like CPUs, power many computers] and also make use of cloud computing services.

GAZETTE: What about the institute’s faculty, both new and those already at Harvard working on these questions?

SABATINI: There’s great expertise already at Harvard, and one goal is to bring that expertise together. Basically, we want this institute to be a destination: If you care about intelligence, whether it’s natural or artificial, you should want to come to this institute. That will draw from Harvard and across the world. We have new faculty coming to Harvard who will be explicitly in this domain, who will then help recruit graduate students. We’re going to have professional engineering support on staff to help people translate ideas into code and into deployable solutions. There will also be a very impressive computational back end — hardware to be able to do sophisticated models in a way that is not common in academia. We will have programs by which people already at Harvard can have access to the compute structure and be involved in teaching and recruiting students, and our resources will be available to collaborative Harvard graduate programs, and so forth. We want the community to be as big as possible.

KAKADE: The institute has a pretty unique mission and a unique plan for how we’ll go about things, particularly for academia. Plenty of schools can hire more faculty, but here they’ll be able to do interdisciplinary work and have the resources — support for students, postdocs, engineers, and large-scale computing — to facilitate the program. This is hard to do in academia because we’re limited by grants, and it’s very hard to get sufficient computing resources.

GAZETTE: Why are the resources behind this gift so important?

KAKADE: A unique aspect of the institute is its scale. That allows us to address a number of factors: research, education, and computing. The educational side is not just graduate students; it’s postdocs down to undergraduates. We’re even thinking about having a post-baccalaureate program. The computing side is also remarkable for an academic institution. These algorithms are very data-hungry, and it’s increasingly becoming the case that only the biggest industrial labs can do such research, even though it’s really important to have academia involved to ask purely scientific questions. The institute is set up so that it isn’t just lots of money for computation. It’s engineering support, training people to understand how to build and support ML systems — the gift will greatly expand Harvard’s educational and research capacity in machine learning — and to help with the research pipeline. We will be able to do collaborative, large-scale research like you might at a big company. It will allow us to ask the types of questions that they ask there, but from a very different perspective. This is going to be pretty remarkable.

SABATINI: Some fields are very broad, and if you want to be excellent in that field, you have to be big. For example, my home department at HMS — neurobiology — spans everything from thinking about the structure of an ion channel to processes of cognition. You can’t have a small neurobiology program and be the best. This is a similar field. You need to have a certain mass, a certain breadth, and diversity of people in the institute in order to be on top. You want the only limitation to be ideas. If somebody has a brilliant idea, you want the infrastructure in place, the resources in place such that that idea can be translated into something real as quickly as possible. That requires scale, computing hardware, engineers, project management — all this is going to be essential in moving from idea to reality. The challenge is to build a research resource-deployment structure and a community structure: a place where everybody’s welcome, there are diverse backgrounds, and where we can be proactive in creating a diverse workplace and next generation. It also has to be a place where anyone can have an idea and receive positive reinforcement that helps it become reality.

GAZETTE: When we look ahead to the potential impact of those ideas, what areas of society are most ripe for the introduction of AI?

KAKADE: To a large extent, what’s been happening is that computers are getting better at the types of things people are good at, and we’re seeing tremendous impact across the board. Obviously, we’re seeing a lot of impact with regard to search, and speech recognition really helps people when they’re mobile and can ask questions about what’s near them. Information-gathering, information-processing with robotics is improving. Also, computer vision systems — on cars for example — are improving across the board. The excitement though, is about the potential to have a higher impact in the future. It’s also important that we’re aware of the pitfalls as our systems are improving. The institute’s focus will not be on directly deploying new technologies. Instead, the broader goal of the institute is discovering and increasing scientific knowledge, which we will pursue within ethical frameworks and with a desire to improve the world. Our work will help others advance a field that takes seriously the ethical concerns that have been raised by those both within and outside the field. Here, we will connect with relevant scholars and policymakers, both at Harvard and beyond, because these issues need to be considered in a broader context.

GAZETTE: When you say that excitement is building for a higher impact, are there particular areas people are most excited about?

KAKADE: Obviously, robotics and self-driving cars are moving rapidly. And natural-language processing is big, because the ability of automated systems to be able to generate text that resembles what a human might say is huge. If we can just improve question-answering, for example, that would be pretty incredible. You could ask things and receive answers in a reasonable manner. It’s obviously not all the way there yet, but the fact that language models are not completely gibberish and sometimes produce rich human language is pretty stunning.

GAZETTE: You’re talking about things like Siri, where you can ask a question and it’ll respond without a human being involved at all?

KAKADE: Siri is speech to text, but you can think as well of typing in questions on search and rather than getting back a website, you get the actual information, the answer you’re looking for. There is a growing interconnectedness for how we communicate with devices — Alexa, Siri, for example. Part of the excitement with the field’s progress in natural language models is the ability to have richer interactions both to communicate with each other, to obtain knowledge-based information, and for better interactions with voice-activated devices. That’s pretty exciting. There’s also an improving ability of automated systems to sense their environment, which is huge for smart homes and automated systems. Other areas are progressing also due to the ability of cameras to parse the visual world better, as in medical domains. The last couple of years have been remarkable for scene parsing: You take a picture, and it can understand the various objects in the picture and the relationships among them. More generally, the institute’s broader scientific and technological goals are to facilitate the development of methodologies that permit systems to interact with an environment the way a person might.

SABATINI: It’s important to note that the institute is not only about applications and advancing AI; there’s a fundamental part that is simply about discovering and increasing knowledge. Part of that discovery will be creating computational theories that can explain how our brains work. Once we understand how the brain “computes” and processes information, scientists will be able to eventually understand how those processes go wrong in disease. To do this we will draw from the computational theories that are being developed for understanding how AI works.

Second, any time an artificially intelligent agent has to interact with a human, it would be valuable for that agent to know something about the physical world and to have some common sense. We have common sense, and we expect AI to have common sense, but in general, it doesn’t. So I think that’s going to be a great advance.

Third are problems that we’re not even sure can be addressed. If we could create AI systems that are much larger and have, for example, access to all medical knowledge or all the structures of proteins, what kinds of new discoveries and intelligent conclusions could it make? Those discoveries could come at the level of understanding disease, discovering new therapies, or simply contributing to knowledge about how the world works. I don’t think we can dream of what those things are yet.

The steering committee for the institute: Boaz Barak (SEAS, computer science); Francesca Dominici (Harvard Chan School, biostatistics); Finale Doshi-Velez (SEAS, computer science); Catherine Dulac (FAS, molecular and cellular biology); Robert Gentleman (HMS, computational biomedicine/biomedical informatics); Sam Gershman (FAS, psychology); Zak Kohane (HMS, biomedical informatics); Susan Murphy (SEAS/FAS, computer science/statistics); Venki Murthy (FAS, molecular and cellular biology); and Fernanda Viégas (SEAS/HBS, computer science).