Embedding ethics in computer science curriculum

Photo illustration by Judy Blomquist/Harvard Staff

Harvard initiative seen as a national model

Barbara Grosz has a fantasy that every time a computer scientist logs on to write an algorithm or build a system, a message will flash across the screen that asks, “Have you thought about the ethical implications of what you’re doing?”

Until that day arrives, Grosz, the Higgins Professor of Natural Sciences at the Harvard John A. Paulson School of Engineering and Applied Sciences (SEAS), is working to instill in the next generation of computer scientists a mindset that considers the societal impact of their work, along with the ethical reasoning and communication skills to act on it.

“Ethics permeates the design of almost every computer system or algorithm that’s going out in the world,” Grosz said. “We want to educate our students to think not only about what systems they could build, but whether they should build those systems and how they should design those systems.”

At a time when computer science departments around the country are grappling with how to turn out graduates who understand ethics as well as algorithms, Harvard is taking a novel approach.

In 2015, Grosz designed a new course called “Intelligent Systems: Design and Ethical Challenges.” An expert in artificial intelligence and a pioneer in natural language processing, Grosz turned to colleagues from Harvard’s philosophy department to co-teach the course. They interspersed into the course’s technical content a series of real-life ethical conundrums and the relevant philosophical theories necessary to evaluate them. This forced students to confront questions that, unlike most computer science problems, have no obvious correct answer.

Students responded. The course quickly attracted a following, and by the second year 140 people were competing for 30 spots. Demand for more such courses came not only from students but also from Grosz’s computer science faculty colleagues.

“The faculty thought this was interesting and important, but they didn’t have expertise in ethics to teach it themselves,” she said.

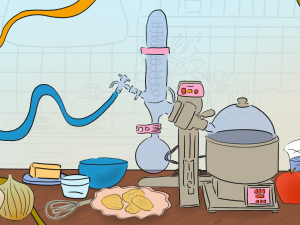

Barbara Grosz (from left), Jeffrey Behrends, and Alison Simmons hope Harvard’s approach to turning out graduates who understand ethics as well as algorithms becomes a national model.

Rose Lincoln/Harvard Staff Photographer

In response, Grosz and collaborator Alison Simmons, the Samuel H. Wolcott Professor of Philosophy, developed a model that draws on the expertise of the philosophy department and integrates it into a growing list of more than a dozen computer science courses, from introductory programming to graduate-level theory.

Under the initiative, dubbed Embedded EthiCS, philosophy graduate students are paired with computer science faculty members. Together, they review the course material and decide on an ethically rich topic that will naturally arise from the content. A graduate student identifies readings and develops a case study, activities, and assignments that will reinforce the material. The computer science and philosophy instructors teach side by side when the Embedded EthiCS material is brought to the classroom.

Grosz and her philosophy colleagues are at the center of a movement that they hope will spread to computer science programs around the country. Harvard’s “distributed pedagogy” approach is different from many university programs that treat ethics by adding a stand-alone course that is, more often than not, just an elective for computer science majors.

“Standalone courses can be great, but they can send the message that ethics is something that you think about after you’ve done your ‘real’ computer science work,” Simmons said. “We want to send the message that ethical reasoning is part of what you do as a computer scientist.”

Embedding ethics across the curriculum helps computer science students see how ethical issues can arise from many contexts, issues ranging from the way social networks facilitate the spread of false information to censorship to machine-learning techniques that empower statistical inferences in employment and in the criminal justice system.

Courses in artificial intelligence and machine learning are obvious areas for ethical discussions, but Embedded EthiCS also has built modules for less-obvious pairings, such as applied algebra.

“We really want to get students habituated to thinking: How might an ethical issue arise in this context or that context?” Simmons said.

Curriculum at a glance

A sampling of classes from the Embedded EthiCS pilot program and the issues they address

- Great Ideas in Computer Science: The ethics of electronic privacy

- Introduction to Computer Science II: Morally responsible software engineering

- Networks: Facebook, fake news, and ethics of censorship

- Programming Languages: Verifiably ethical software systems

- Design of Useful and Usable Interactive Systems: Inclusive design and equality of opportunity

- Introduction to AI: Machines and moral decision making

- Autonomous Robot Systems: Robots and work

David Parkes, George F. Colony Professor of Computer Science, teaches a wide-ranging undergraduate class on topics in algorithmic economics. “Without this initiative, I would have struggled to craft the right ethical questions related to rules for matching markets, or choosing objectives for recommender systems,” he said. “It has been an eye-opening experience to get students to think carefully about ethical issues.”

Grosz acknowledged that it can be a challenge for computer science faculty and their students to wrap their heads around often opaque ethical quandaries.

“Computer scientists are used to there being ways to prove problem set answers correct or algorithms efficient,” she said. “To wind up in a situation where different values lead to there being trade-offs and ways to support different ‘right conclusions’ is a challenging mind shift. But getting these normative issues into the computer system designer’s mind is crucial for society right now.”

Jeffrey Behrends, currently a fellow-in-residence at Harvard’s Edmond J. Safra Center for Ethics, has co-taught the design and ethics course with Grosz. Behrends said the experience revealed greater harmony between the two fields than one might expect.

“Once students who are unfamiliar with philosophy are introduced to it, they realize that it’s not some arcane enterprise that’s wholly independent from other ways of thinking about the world,” he said. “A lot of students who are attracted to computer science are also attracted to some of the methodologies of philosophy, because we emphasize rigorous thinking. We emphasize a methodology for solving problems that doesn’t look too dissimilar from some of the methodologies in solving problems in computer science.”

The Embedded EthiCS model has attracted interest from universities and companies around the country. Recently, experts from more than 20 institutions gathered at Harvard for a workshop on the challenges and best practices for integrating ethics into computer science curricula. Mary Gray, a senior researcher at Microsoft Research (and a fellow at Harvard’s Berkman Klein Center for Internet and Society), who helped convene the gathering, said that in addition to impeccable technical chops, employers increasingly are looking for people who understand the need to create technology that is accessible and socially responsible.

“Our challenge in industry is to help researchers and practitioners not see ethics as a box that has to be checked at the end, but rather to think about these things from the very beginning of a project,” Gray said.

Those concerns recently inspired the Association for Computing Machinery (ACM), the world’s largest scientific and educational computing society, to update its code of ethics for the first time since 1992.

In hope of spreading the Embedded EthiCS concept widely across the computer science landscape, Grosz and colleagues have authored a paper to be published in the journal Communications of the ACM and launched a website to serve as an open-source repository of their most successful course modules.

They envision a culture shift that leads to a new generation of ethically minded computer science practitioners.

“In our dream world, success will lead to better-informed policymakers and new corporate models of organization that build ethics into all stages of design and corporate leadership,” Behrends said.

The experiment has also led to interesting conversations beyond the realm of computer science.

“We’ve been doing this in the context of technology, but embedding ethics in this way is important for every scientific discipline that is putting things out in the world,” Grosz said. “To do that, we will need to grow a generation of philosophers who will think about ways in which they can take philosophical ethics and normative thinking, and bring it to all of science and technology.”

Carefully designed course modules

At the heart of the Embedded EthiCS program are carefully designed, course-specific modules, collaboratively developed by faculty along with computer science and philosophy graduate student teaching fellows.

A module that Kate Vredenburgh, a philosophy Ph.D. student, created for a course taught by Professor Finale Doshi-Velez asks students to grapple with questions of how machine-learning models can be discriminatory, and how that discrimination can be reduced. An introductory lecture sets out a philosophical framework of what discrimination is, including the concepts of disparate treatment and disparate impact. Students learn that eliminating discrimination in machine learning requires more than simply reducing bias in the technical sense. Even setting a socially good task may not be enough to reduce discrimination, since machine learning relies on predictively useful correlations, and those correlations sometimes result in increased inequality between groups.

The module illuminates the ramifications and potential limitations of using a disparate impact definition to identify discrimination. It also introduces technical computer science work on discrimination, namely statistical fairness criteria. An in-class exercise focuses on a case in which an algorithm that predicts the success of job applicants for sales positions at a major retailer recommends fewer African-American applicants than white applicants.
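To make the idea of a disparate impact check concrete, here is a minimal sketch of one way such a measure might be computed. It is not taken from the course module. The applicant records, the group labels "A" and "B", the function names, and the 0.8 cutoff (a commonly cited "four-fifths" rule of thumb in U.S. employment contexts) are all assumptions chosen for illustration.

```python
from collections import Counter


def selection_rates(records):
    """Return {group: fraction recommended} from (group, recommended) pairs."""
    totals = Counter()
    selected = Counter()
    for group, recommended in records:
        totals[group] += 1
        if recommended:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates, group, reference_group):
    """Ratio of one group's selection rate to the reference group's rate."""
    return rates[group] / rates[reference_group]


# Hypothetical data: 100 applicants per group, not drawn from any real case.
records = (
    [("A", True)] * 30 + [("A", False)] * 70
    + [("B", True)] * 50 + [("B", False)] * 50
)

rates = selection_rates(records)
ratio = disparate_impact_ratio(rates, "A", "B")

# Assumed threshold: ratios below 0.8 are flagged for closer review.
flagged = ratio < 0.8
print(f"selection rates: {rates}")
print(f"disparate impact ratio: {ratio:.2f}, flagged: {flagged}")
```

A ratio like this only compares outcomes across groups. As the module stresses, a single statistical criterion has limits, so a flag here would be a prompt for further ethical analysis rather than a verdict.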

An out-of-class assignment asks students to draw on this grounding to address a concrete ethical problem faced by working computer scientists (in this case, software engineers working for the Department of Labor). The assignment gives students an opportunity to apply the material to a real-world problem of the sort they might face in their careers, and asks them to articulate and defend their approach to solving the problem.